Herkules

Julian Rakuschek - 2023-04-11 - TU Graz

Web Development

Course Management

Automated testing

Teaching

Herkules is an automated assignment testing system for the course Fundamentals of Geometry Processing (FGP). It is the result of my bachelor thesis, supervised by Dipl.-Ing. Alexander Komar, BSc and Assoc.Prof. Ursula Augsdörfer, MSc PhD.

In FGP, students need to submit four assignments in which they program typical geometry processing tasks in C++. These assignments can be automatically tested for correctness and efficiency by comparing them with the tutor reference solution.

Four Fundamental Issues

In the early years of FGP, automated submission testing was done via a GitLab pipeline, but this pipeline had some severe issues:

- The pipeline was extremely slow, because it had to compile the entire student code separately for every single testcase.

- Testing was insecure, because the code was not executed in an isolated sandbox.

- Only one submission could be tested at a time.

- Tutors had a hard time tracking the progress of students.

The assignment testing system presented in my thesis solves all of these issues and provides some additional powerful features to the teaching staff. The first issue was tackled by employing the GoogleTest library, a framework for writing testcases in C++. This immediately solves the compiling issue, because the submission now only needs to be compiled once. Furthermore, the pure framework code is pre-compiled, so the testing system only needs to compile the student code.

The GoogleTest library offers some more advantages. For instance, we can now load the mesh once and use it in all subsequent testcases. The unit tests are also much more readable. An example is shown below:

    TEST_F(MeshDecimate, AverageNormal) {
        prepareFaceCalculations(student_mesh, reference_mesh, true, true, false);
        for (std::size_t vertex_id = 0; vertex_id < reference_mesh->getVertexAmount(); vertex_id++) {
            auto stud = student_mesh->getVertex(vertex_id)->calcAverageNormal();
            auto ref = reference_mesh->getVertex(vertex_id)->calcAverageNormal();
            auto diff = std::abs((stud - ref).abs());
            check_vector_distance(stud, ref);
            EXPECT_FALSE(isnan(diff)) << "AverageNormal at Vertex ID " << vertex_id << " is NaN";
        }
    }
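The test above compares each student result with the reference and separately guards against NaN. The following is a minimal sketch of what such a comparison could look like using only the standard library. `Vec3`, `distance`, `hasNaN`, `matchesReference` and the default tolerance are hypothetical names and values chosen for illustration; they are not the course's mesh API, and this is not the actual implementation of `check_vector_distance`.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for the vector type used by the course framework.
struct Vec3 {
    double x, y, z;
};

// Euclidean distance between two vectors.
double distance(const Vec3& a, const Vec3& b) {
    const double dx = a.x - b.x;
    const double dy = a.y - b.y;
    const double dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// True if any component of v is NaN.
bool hasNaN(const Vec3& v) {
    return std::isnan(v.x) || std::isnan(v.y) || std::isnan(v.z);
}

// Accepts the student result only if it contains no NaN and lies within
// `tolerance` of the reference. The default tolerance is an assumption.
bool matchesReference(const Vec3& student, const Vec3& reference,
                      double tolerance = 1e-6) {
    assert(tolerance >= 0.0);
    if (hasNaN(student)) {
        return false;
    }
    return distance(student, reference) <= tolerance;
}
```

The explicit NaN check matters because every ordered comparison involving NaN evaluates to false. A check written as "fail if the distance is greater than the tolerance" would therefore let a NaN result pass silently.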

The second issue, insecure testing, was addressed with Docker containerization: every submission is tested in an isolated sandbox. The submission therefore has only a very limited view of the file system, cannot connect to the internet, and is barred from system calls that the assignment does not require.

The third issue posed a huge problem: if one student accidentally submitted an endless loop and the timeout was generous, all other students had to wait. This was solved by implementing a scheduler that provides a queue. On every push to the repository, the student is enqueued. The scheduler has a pool of worker threads, and as soon as a worker is free, the scheduler assigns it the next student in the queue. This follows the so-called airport principle: one long queue served by multiple counters. The queue itself lives in the Redis key-value store, which also allows the scheduler and the backend of the website (next section) to communicate.
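The airport principle can be sketched as a single shared queue served by a fixed pool of worker threads. The example below is a simplified in-memory illustration in C++ and rests on several assumptions: the actual scheduler keeps its queue in Redis, its implementation language is not stated here, and the names `Scheduler`, `enqueue` and `workerLoop` are hypothetical. Timeouts for runaway submissions are deliberately left out.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// One long queue, several "counters" (workers). In-memory stand-in for the
// Redis-backed queue described above; all names are hypothetical.
class Scheduler {
public:
    explicit Scheduler(std::size_t worker_count) {
        assert(worker_count > 0);
        for (std::size_t i = 0; i < worker_count; ++i) {
            workers_.emplace_back([this] { workerLoop(); });
        }
    }

    // Lets the workers finish all queued jobs, then joins them.
    ~Scheduler() {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            stopping_ = true;
        }
        wake_.notify_all();
        for (auto& worker : workers_) {
            worker.join();
        }
    }

    // Called on every push: append the submission's test job to the queue.
    void enqueue(std::function<void()> job) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            jobs_.push(std::move(job));
        }
        wake_.notify_one();
    }

private:
    void workerLoop() {
        for (;;) {
            std::function<void()> job;
            {
                std::unique_lock<std::mutex> lock(mutex_);
                wake_.wait(lock, [this] { return stopping_ || !jobs_.empty(); });
                if (jobs_.empty()) {
                    return;  // shutting down and nothing left to do
                }
                job = std::move(jobs_.front());
                jobs_.pop();
            }
            job();  // run outside the lock so other workers stay busy
        }
    }

    std::mutex mutex_;
    std::condition_variable wake_;
    std::queue<std::function<void()>> jobs_;
    std::vector<std::thread> workers_;
    bool stopping_ = false;
};
```

With, say, three workers, a single slow submission occupies only one of them while the other two keep serving the queue in first-in, first-out order.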

The scheduler is perhaps one of the most valuable results from this thesis as I could already use it in three other projects! I have provided a reduced version of the scheduler in a separate pensieve entry.

The final issue, result presentation, was solved by setting up a complete web stack for the testing system which is explained in the next section.

Website

One major goal of the testing system is to present the results to students in a user-friendly manner. This includes sparing them from having to create a new user account. Inspired by the system used in the Computer Graphics and Computer Vision courses, we provide a login where students sign in with their TU Graz account. This also avoids unnecessary complexity in the testing system. The web application is organized as a classic full-stack application, with Svelte in the frontend and Flask in the backend, which serves as an API for the frontend.

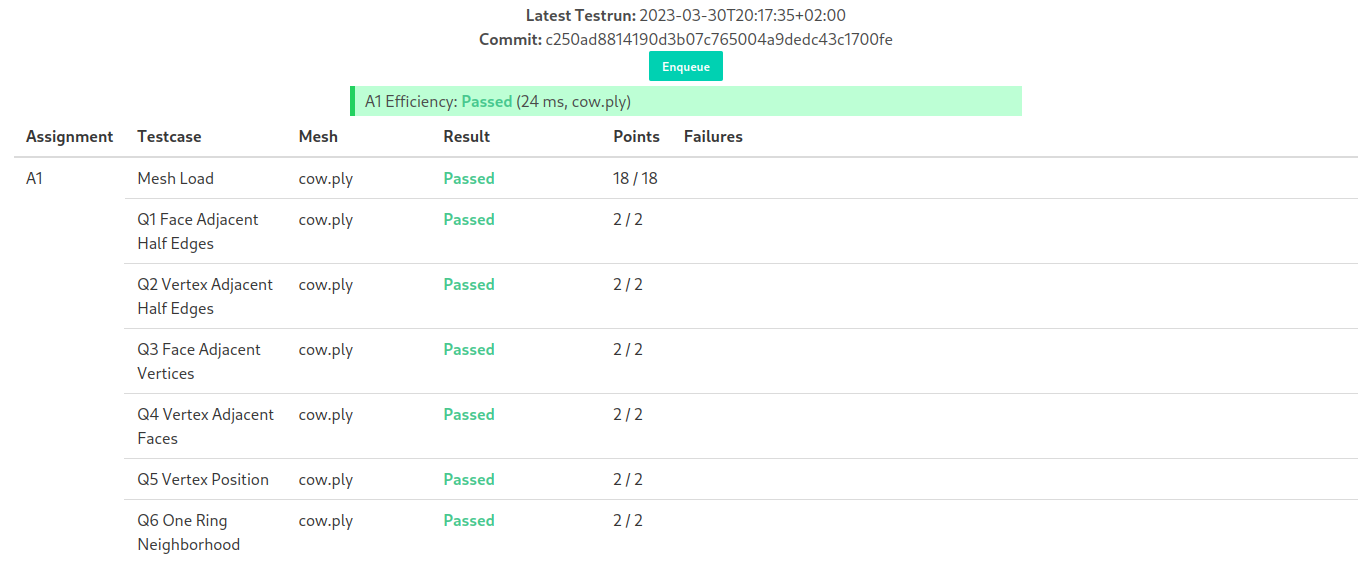

Once the student logs in, they can investigate the following table to check their results:

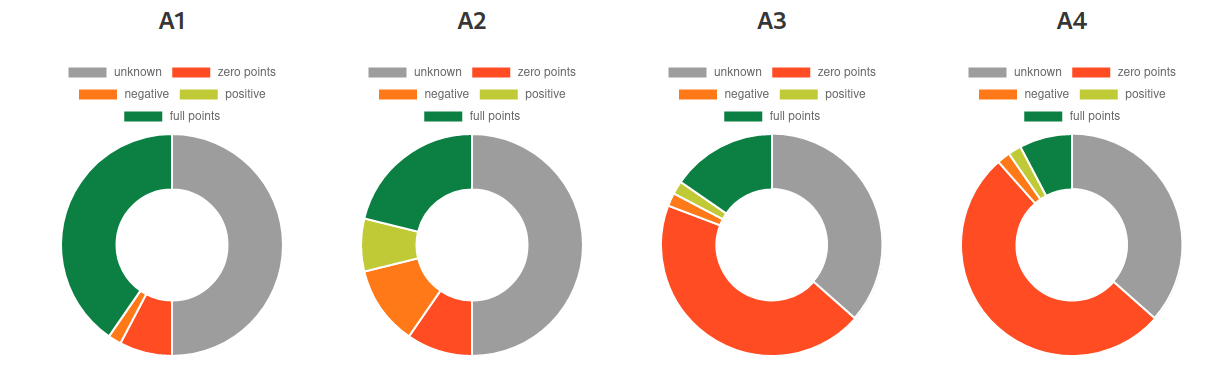

The failures column currently shows the raw, hard-to-read output of the GoogleTest library; improving it is part of the future work. The testing system also provides a dashboard for tutors where they can check the assignment progress (status unknown means that the student has never pushed anything):

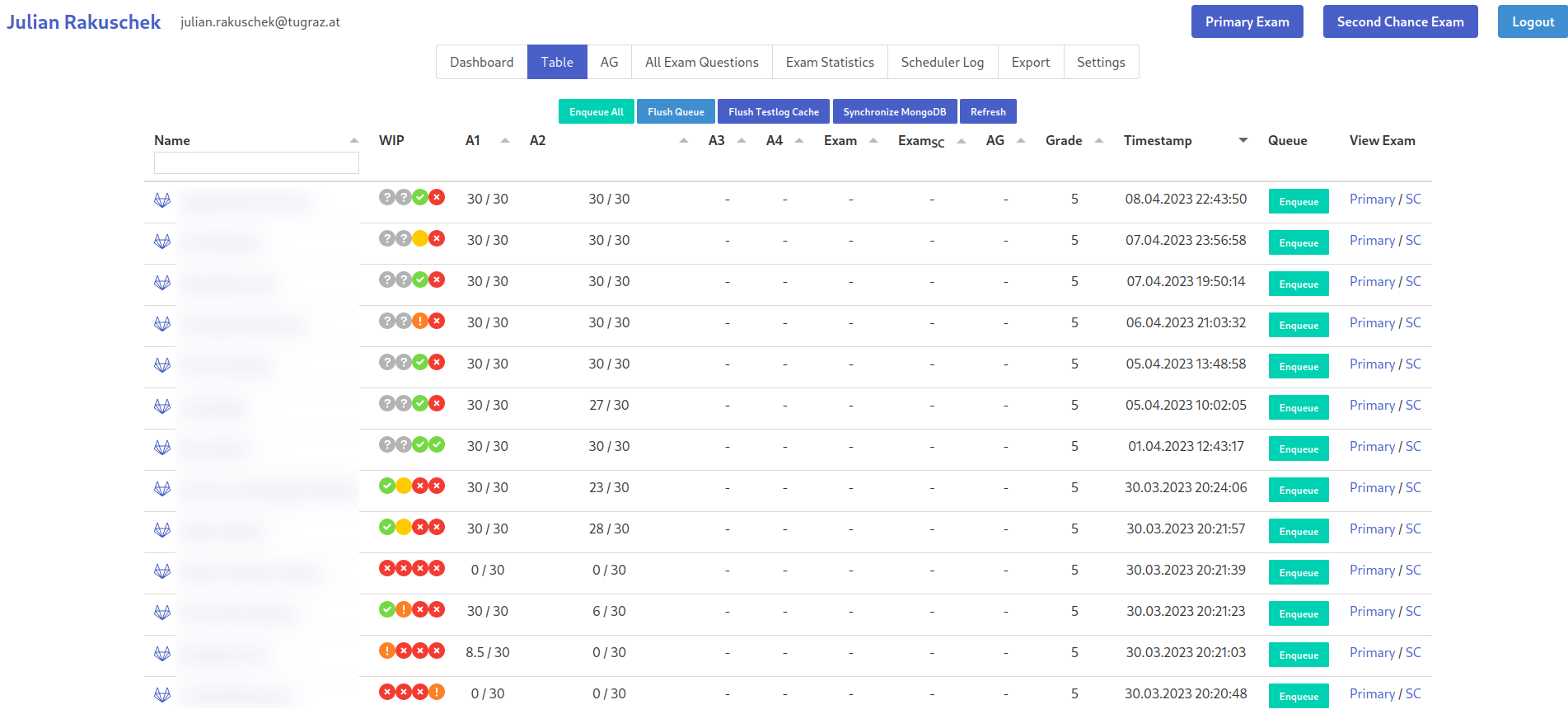

Furthermore, the tutors can check on each participant's individual status by checking this table:

Online Exam via Herkules

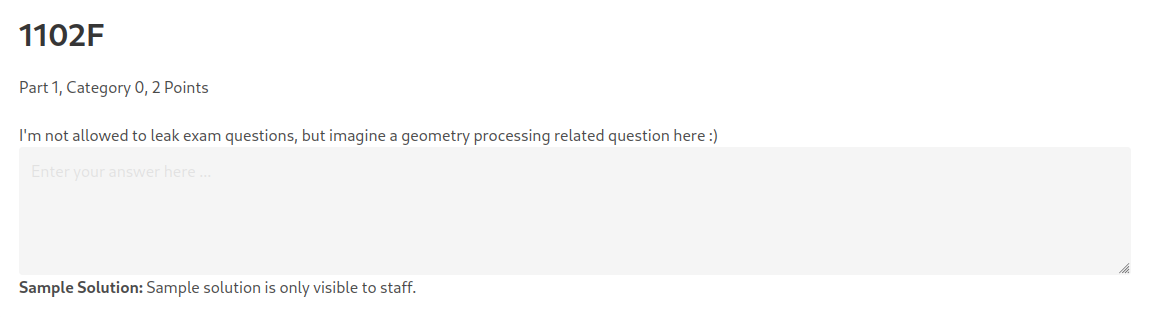

Besides assignment submission testing, online exams can also be conducted via the Herkules system (not covered in the bachelor thesis). The reason is that TeachCenter does not allow a more complex distribution of questions, which is why we programmed our own exam environment. Questions can be pure text, multiple choice (MC), single choice (SC) or mixed. One core feature is that the exam is saved after every change, so that the probability of losing information is minimal. Furthermore, grading the exams is highly convenient, since we can directly display a sample solution as shown below:

Future Work

Although the system is quite complete, a number of issues remain:

- We would like to serve more meaningful failure explanations to students.

- Only comparing the student submission with the reference solution limits the degrees of freedom in the implementation.

- In the online exam it would be helpful to prompt students to mark something directly on the presented image.